I spoke with James Field on 11/05/2016, and he suggested that I start experimenting with combining the physical output with a visual one. He thought JavaScript might be the best way to do this, as it could drive a dynamic animation that responds to the level of an audio input. For example, a computer could capture the sound of the choir installation and convert its level into a value (e.g. between 0 and 100) that then triggers or alters an animation. The animation could vary properties such as opacity, colour value, position and scale. For my Project 2 hand-in this can be a very basic solution, which I can then develop into a more complex one for the final Project 3 outcome.
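As a rough sketch of that idea, the snippet below turns a block of audio samples into a 0 to 100 level and then maps that level onto a few animation properties. The function names `levelFromSamples` and `animationFromLevel`, the use of an RMS average, and the output ranges are all my own assumptions for illustration; how the samples would actually be captured from the installation is not covered here.

```javascript
// Hypothetical helper: reduce raw audio samples (each between -1 and 1)
// to a single level between 0 and 100 using the RMS (root mean square).
function levelFromSamples(samples) {
  if (samples.length === 0) {
    return 0;
  }
  var sumSquares = 0;
  for (var i = 0; i < samples.length; i++) {
    sumSquares += samples[i] * samples[i];
  }
  var rms = Math.sqrt(sumSquares / samples.length);
  // Scale to 0-100 and cap at 100 in case of out-of-range input.
  return Math.min(100, Math.round(rms * 100));
}

// Hypothetical helper: map a 0-100 level onto animation properties.
// The ranges (opacity 0.2-1, scale 1-2) are arbitrary starting points.
function animationFromLevel(level) {
  var t = Math.max(0, Math.min(100, level)) / 100; // normalise to 0-1
  return {
    opacity: 0.2 + 0.8 * t,
    scale: 1 + t,
    rotationSpeed: 0.05 * t
  };
}

// Example: a moderately loud block of samples.
var level = levelFromSamples([0.5, -0.5, 0.5, -0.5]);
console.log(level, animationFromLevel(level));
```

Keeping the level calculation separate from the animation mapping means the basic Project 2 version and a more complex Project 3 version could share the same input and only change how the level is used.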

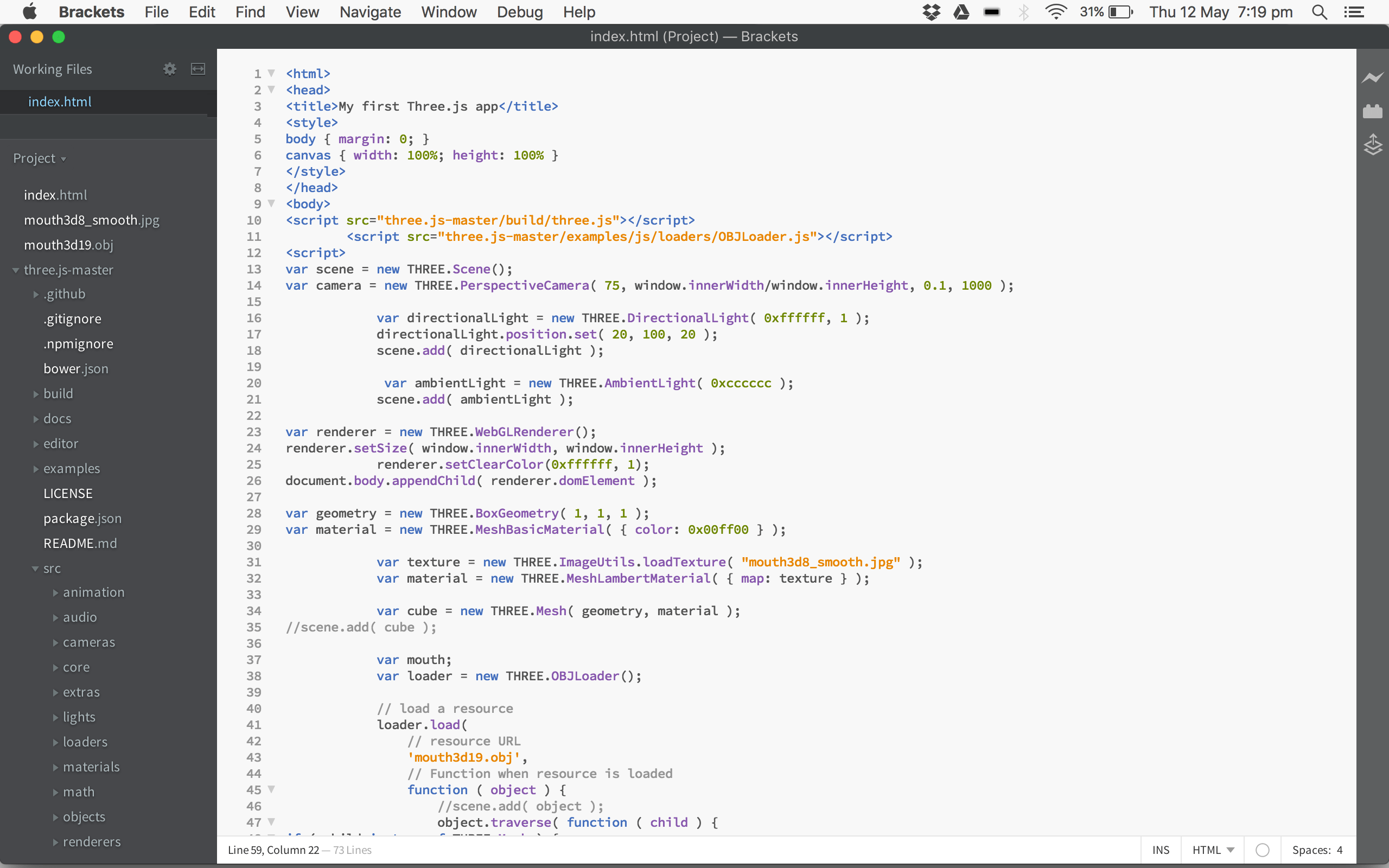

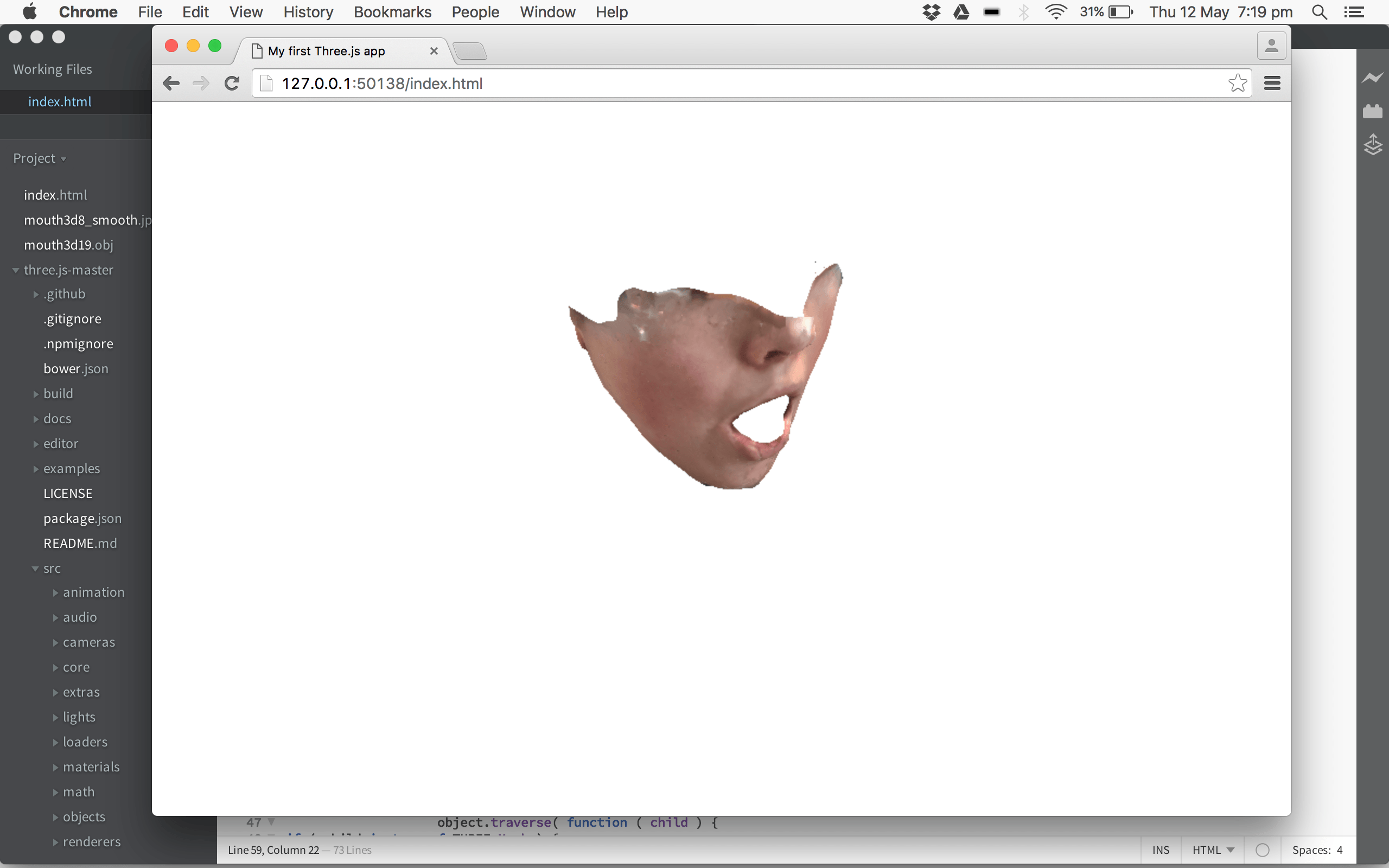

I initially tried to get to grips with JavaScript using some links James sent me. I began with the simple “Creating a scene” tutorial here: http://threejs.org/docs/index.html#Manual/Introduction/Creating_a_scene. This was useful for understanding how the different sections of code work and, most importantly, how script tags are needed to load in the different files and libraries. Next I attempted to load a .obj file in place of the cube, but I didn’t get very far on my own due to my lack of JavaScript knowledge. James helped me get started, explaining how to add the script for an OBJ loader and then load the actual model file and texture. Once I had got to grips with this I tried it out for myself and added a rotation to begin experimenting with animation. The effect is interesting, and I think it could be very effective once the dynamic aspects are added.

Here is the code:

<html>

<head>

<title>My first Three.js app</title>

<style>

body { margin: 0; }

canvas { width: 100%; height: 100% }

</style>

</head>

<body>

<script src="three.js-master/build/three.js"></script>

<script src="three.js-master/examples/js/loaders/OBJLoader.js"></script>

<script>

var scene = new THREE.Scene();

var camera = new THREE.PerspectiveCamera( 75, window.innerWidth/window.innerHeight, 0.1, 1000 );

var directionalLight = new THREE.DirectionalLight( 0xffffff, 1 );

directionalLight.position.set( 20, 100, 20 );

scene.add( directionalLight );

var ambientLight = new THREE.AmbientLight( 0xcccccc );

scene.add( ambientLight );

var renderer = new THREE.WebGLRenderer();

renderer.setSize( window.innerWidth, window.innerHeight );

renderer.setClearColor(0xffffff, 1);

document.body.appendChild( renderer.domElement );

var geometry = new THREE.BoxGeometry( 1, 1, 1 );

var material = new THREE.MeshBasicMaterial( { color: 0x00ff00 } );

var texture = new THREE.ImageUtils.loadTexture( "mouth3d8_smooth.jpg" );

var material = new THREE.MeshLambertMaterial( { map: texture } );

var cube = new THREE.Mesh( geometry, material );

//scene.add( cube );

var mouth;

// instantiate a loader

var loader = new THREE.OBJLoader();

// load a resource

loader.load(

// resource URL

'mouth3d19.obj',

// Function when resource is loaded

function ( object ) {

object.traverse( function ( child ) {

if ( child instanceof THREE.Mesh ) {

mouth = object;

child.material = material;

//child.material.map = texture;

object.position.y = - 1;

scene.add( object );

}

} );

}

);

camera.position.z = 5;

var render = function () {

requestAnimationFrame( render );

// mouth stays undefined until the .obj has finished loading

if ( mouth ) {

mouth.rotation.x += 0.05;

mouth.rotation.y += 0.05;

}

renderer.render(scene, camera);

};

render();

</script>

</body>

</html>

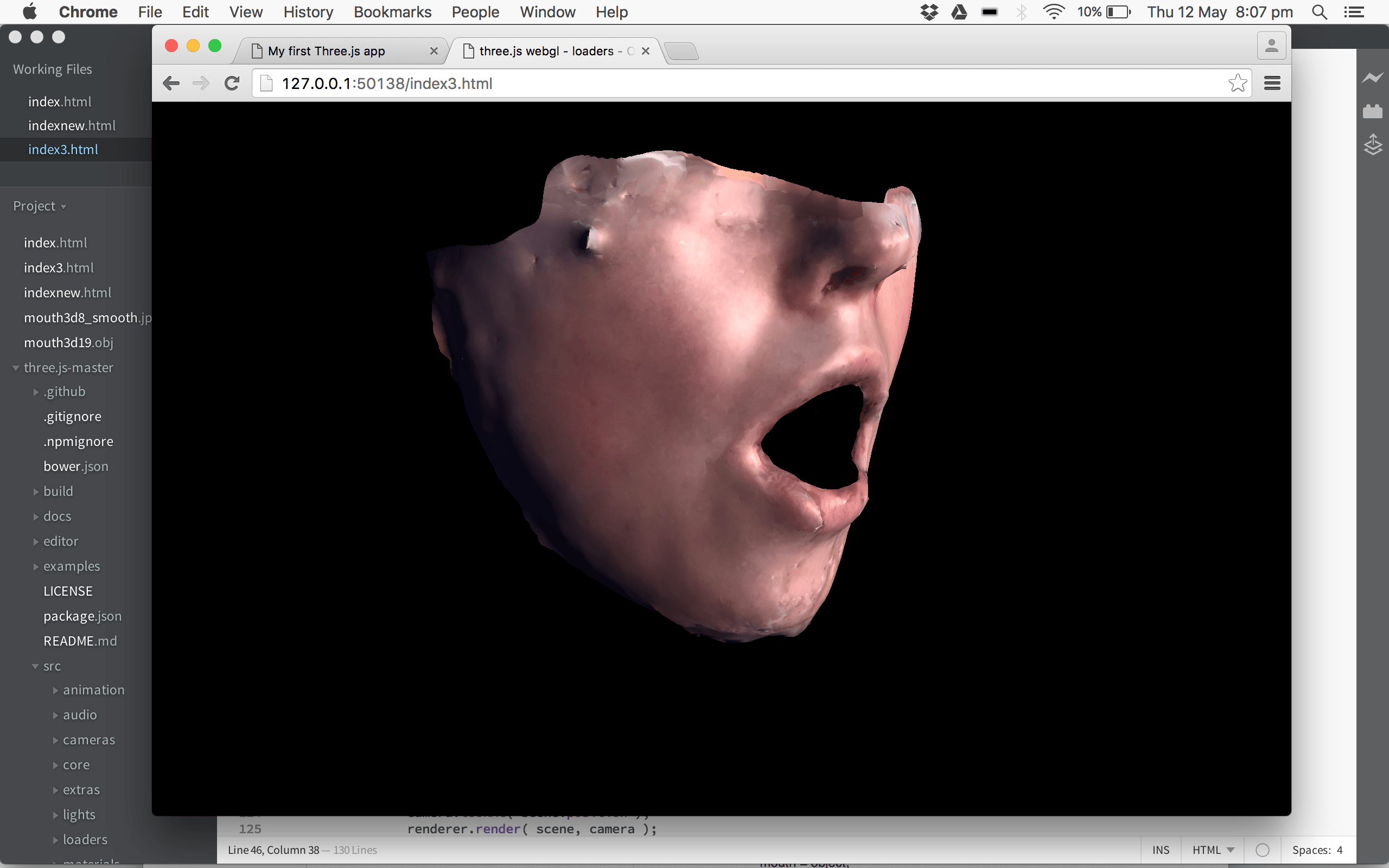

Next I attempted to follow a similar idea to this example: http://threejs.org/examples/#webgl_loader_obj. This simply reacts to the mouse: the cursor position moves the camera, which changes the angle and apparent scale of the .obj model. This works really well with the mouth, and the black background gives it quite a creepy effect.

Here’s the code:

<!DOCTYPE html>

<html lang="en">

<head>

<title>three.js webgl – loaders – OBJ loader</title>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, user-scalable=no, minimum-scale=1.0, maximum-scale=1.0">

<style>

body {

font-family: Monospace;

background-color: #000;

color: #fff;

margin: 0px;

overflow: hidden;

}

#info {

color: #fff;

position: absolute;

top: 10px;

width: 100%;

text-align: center;

z-index: 100;

display:block;

}

#info a, .button { color: #f00; font-weight: bold; text-decoration: underline; cursor: pointer }

</style>

</head>

<body>

<script src="three.js-master/build/three.js"></script>

<script src="three.js-master/examples/js/loaders/OBJLoader.js"></script>

<script>

var container;

var camera, scene, renderer;

var mouseX = 0, mouseY = 0;

var windowHalfX = window.innerWidth / 2;

var windowHalfY = window.innerHeight / 2;

init();

animate();

function init() {

container = document.createElement( 'div' );

document.body.appendChild( container );

camera = new THREE.PerspectiveCamera( 45, window.innerWidth / window.innerHeight, 1, 2000 );

camera.position.z = 5;

// scene

scene = new THREE.Scene();

var ambient = new THREE.AmbientLight( 0x101030 );

scene.add( ambient );

var directionalLight = new THREE.DirectionalLight( 0xffeedd );

directionalLight.position.set( 0, 0, 1 );

scene.add( directionalLight );

// texture

var manager = new THREE.LoadingManager();

manager.onProgress = function ( item, loaded, total ) {

console.log( item, loaded, total );

};

var texture = new THREE.Texture();

var onProgress = function ( xhr ) {

if ( xhr.lengthComputable ) {

var percentComplete = xhr.loaded / xhr.total * 100;

console.log( Math.round(percentComplete, 2) + '% downloaded' );

}

};

var onError = function ( xhr ) {

};

var loader = new THREE.ImageLoader( manager );

loader.load( 'mouth3d8_smooth.jpg', function ( image ) {

texture.image = image;

texture.needsUpdate = true;

} );

// model

var loader = new THREE.OBJLoader( manager );

loader.load( 'mouth3d19.obj', function ( object ) {

object.traverse( function ( child ) {

if ( child instanceof THREE.Mesh ) {

child.material.map = texture;

}

} );

object.position.y = - 1;

scene.add( object );

}, onProgress, onError );

//

renderer = new THREE.WebGLRenderer();

renderer.setPixelRatio( window.devicePixelRatio );

renderer.setSize( window.innerWidth, window.innerHeight );

container.appendChild( renderer.domElement );

document.addEventListener( 'mousemove', onDocumentMouseMove, false );

//

window.addEventListener( 'resize', onWindowResize, false );

}

function onWindowResize() {

windowHalfX = window.innerWidth / 2;

windowHalfY = window.innerHeight / 2;

camera.aspect = window.innerWidth / window.innerHeight;

camera.updateProjectionMatrix();

renderer.setSize( window.innerWidth, window.innerHeight );

}

function onDocumentMouseMove( event ) {

mouseX = ( event.clientX - windowHalfX ) / 2;

mouseY = ( event.clientY - windowHalfY ) / 2;

}

//

function animate() {

requestAnimationFrame( animate );

render();

}

function render() {

camera.position.x += ( mouseX - camera.position.x ) * .05;

camera.position.y += ( - mouseY - camera.position.y ) * .05;

camera.lookAt( scene.position );

renderer.render( scene, camera );

}

</script>

</body>

</html>
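The smooth camera movement in the listing above comes from moving a small fraction of the remaining distance towards the mouse position on every frame. The sketch below isolates that step so it can be run on its own without three.js; the helper name `easeToward`, the target value of 100 and the 60-frame loop are my own choices for illustration, while the 0.05 factor is taken from the listing.

```javascript
// Hypothetical helper: move `current` a fixed fraction of the way to `target`.
// This mirrors the camera update in the listing above:
//   camera.position.x += ( mouseX - camera.position.x ) * .05;
function easeToward(current, target, factor) {
  return current + (target - current) * factor;
}

// Simulate 60 frames of the camera x position chasing a fixed mouse target.
var x = 0;
var targetX = 100; // assumed mouse-derived target, for illustration only
var positions = [];
for (var frame = 0; frame < 60; frame++) {
  x = easeToward(x, targetX, 0.05);
  positions.push(x);
}

// The steps start large and shrink as x approaches the target.
console.log(positions[0], positions[29], positions[59]);
```

The same easing could potentially be applied to an audio-driven value instead of the mouse position, so that sudden changes in level produce a gradual change in the animation rather than a jump.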