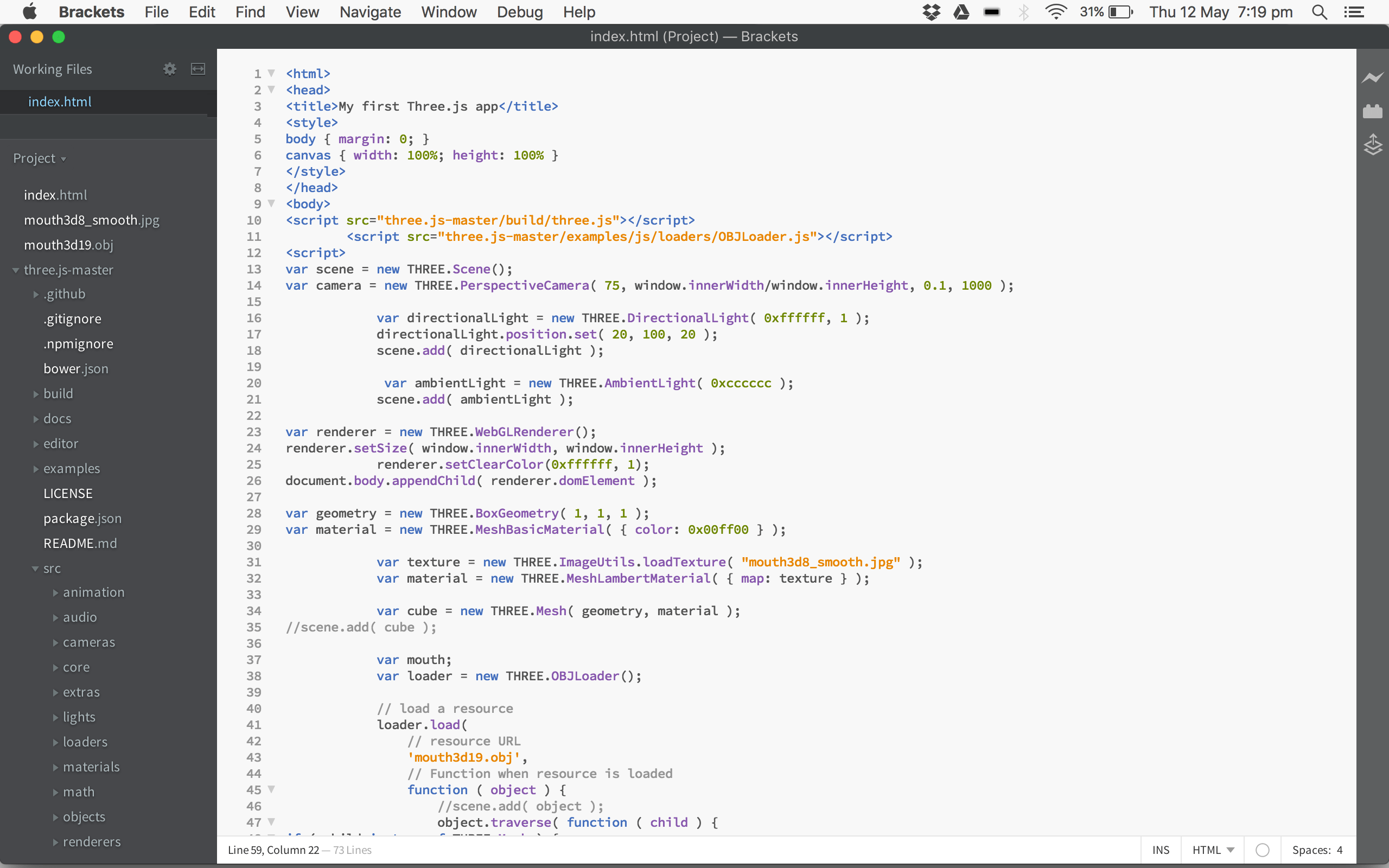

In a tutorial with James Field on 19/05/2016, we went through how to use JavaScript to read the input level of a laptop’s internal microphone and then make that level drive an object’s animation. James set me up with code that first requests the audio input using getUserMedia and then analyses each buffer of samples mathematically to produce a level value:

var max_level_L = 0;

var old_level_L = 0;

var level;

window.AudioContext = window.AudioContext || window.webkitAudioContext;

navigator.getUserMedia = navigator.getUserMedia || navigator.webkitGetUserMedia || navigator.mozGetUserMedia;

var audioContext = new AudioContext();

navigator.getUserMedia(

{audio:true, video:true},

function(stream){

var microphone = audioContext.createMediaStreamSource(stream);

var javascriptNode = audioContext.createScriptProcessor(1024, 1, 1);

microphone.connect(javascriptNode);

javascriptNode.connect(audioContext.destination);

javascriptNode.onaudioprocess = function(event){

var inpt_L = event.inputBuffer.getChannelData(0);

var instant_L = 0.0;

var sum_L = 0.0;

for(var i = 0; i < inpt_L.length; ++i) {

sum_L += inpt_L[i] * inpt_L[i];

}

instant_L = Math.sqrt(sum_L / inpt_L.length);

max_level_L = Math.max(max_level_L, instant_L);

instant_L = Math.max( instant_L, old_level_L -0.008 );

old_level_L = instant_L;

level = instant_L/max_level_L / 10;

}

},

function(e){ console.log(e); }

);
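As a rough sketch of what the analysis inside onaudioprocess does, the same steps can be written as a small function that runs without a microphone. The names rms and createLevelMeter are hypothetical and not part of James's code, and unlike the original this version returns 0 instead of dividing by zero before any sound has been heard.

```javascript
// Root-mean-square of one buffer of samples (each sample is between -1 and 1).
function rms(samples) {
  var sum = 0;
  for (var i = 0; i < samples.length; ++i) {
    sum += samples[i] * samples[i];
  }
  return samples.length > 0 ? Math.sqrt(sum / samples.length) : 0;
}

// Hypothetical helper: keeps the running state that the original code stores in globals.
// decay is how far the level may fall from one buffer to the next (0.008 in the original).
function createLevelMeter(decay) {
  var maxLevel = 0;
  var oldLevel = 0;
  return function (samples) {
    var instant = rms(samples);
    maxLevel = Math.max(maxLevel, instant);         // loudest buffer heard so far
    instant = Math.max(instant, oldLevel - decay);  // fall gradually rather than jump to 0
    oldLevel = instant;
    // Normalise against the loudest buffer, then scale down by 10 as in the original.
    return maxLevel > 0 ? instant / maxLevel / 10 : 0;
  };
}

var meter = createLevelMeter(0.008);
var loud = meter([0.5, -0.5, 0.5, -0.5]); // loudest buffer so far
var quiet = meter([0, 0, 0, 0]);          // silence: the level decays slightly instead of dropping to 0
```

Because the result is divided by the loudest buffer heard so far and then by 10, the level stays between 0 and 0.1, which is why the animation code multiplies it by fairly large numbers.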

Once we have this value, we can store the level in a variable and tell the object to animate accordingly. The code below does this. We needed to make sure that the animation wasn’t triggered until the object had loaded and the level was reading a number greater than 0:

var render = function () {

requestAnimationFrame( render );

if ( mouth ){

if(level > 0){

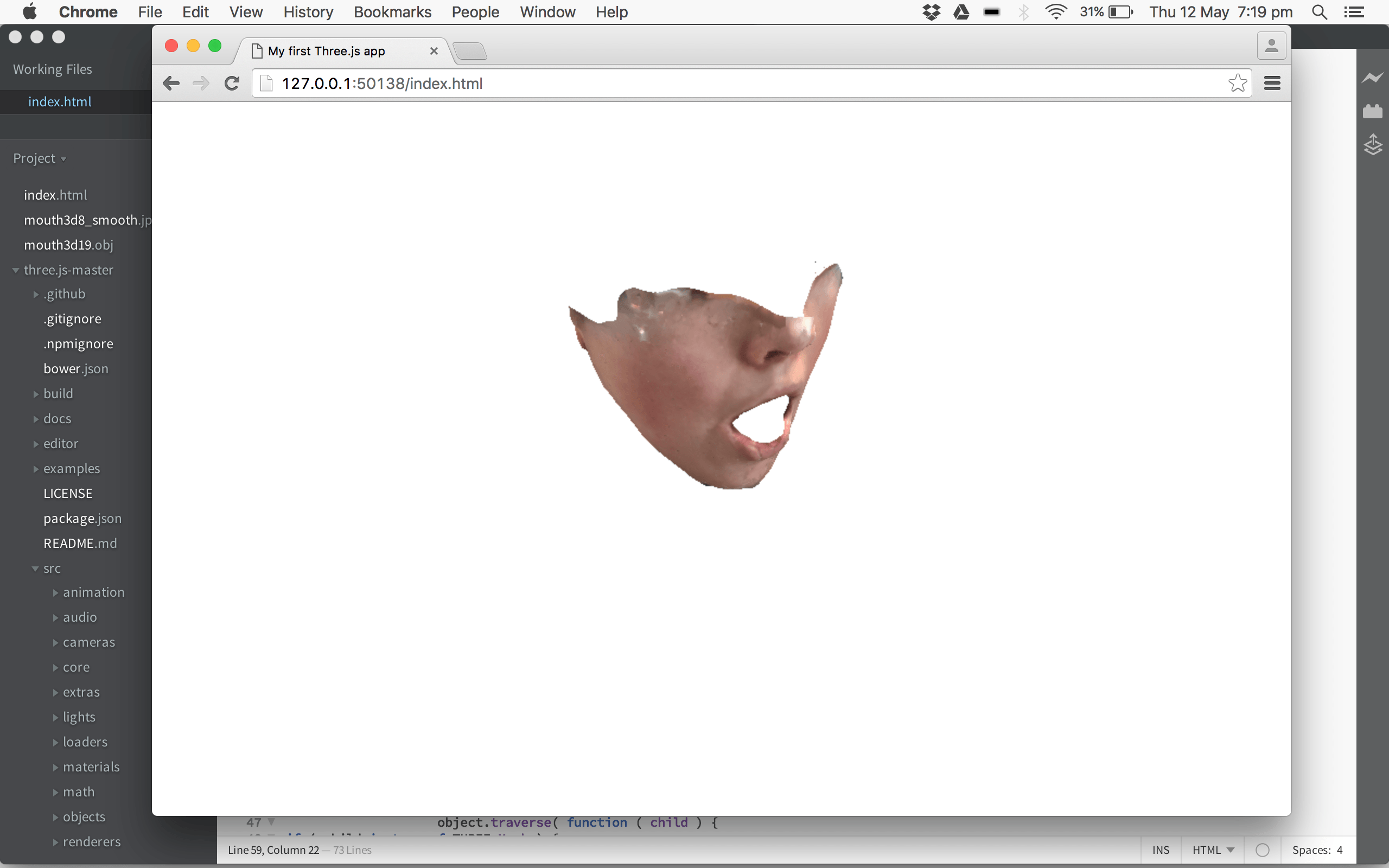

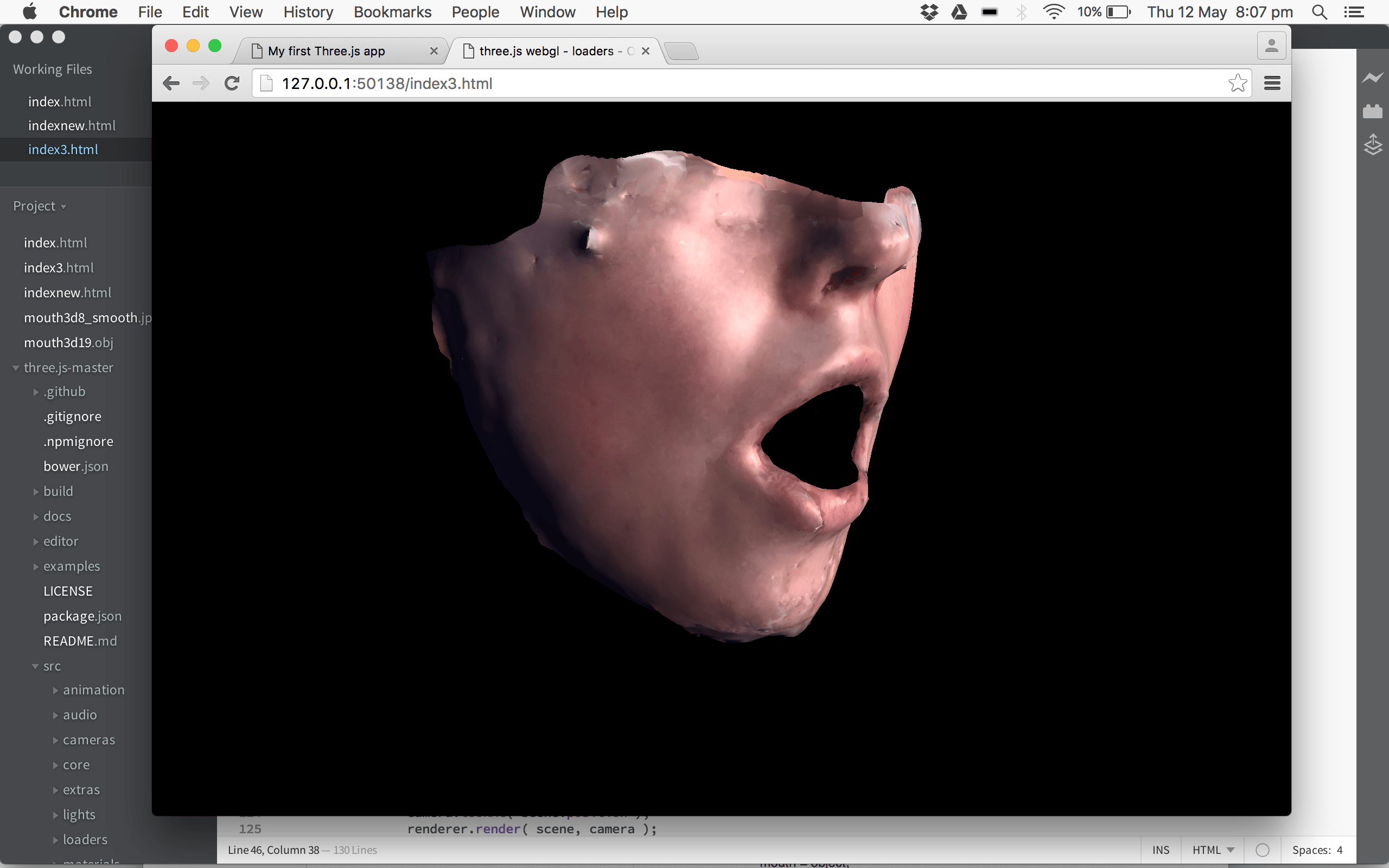

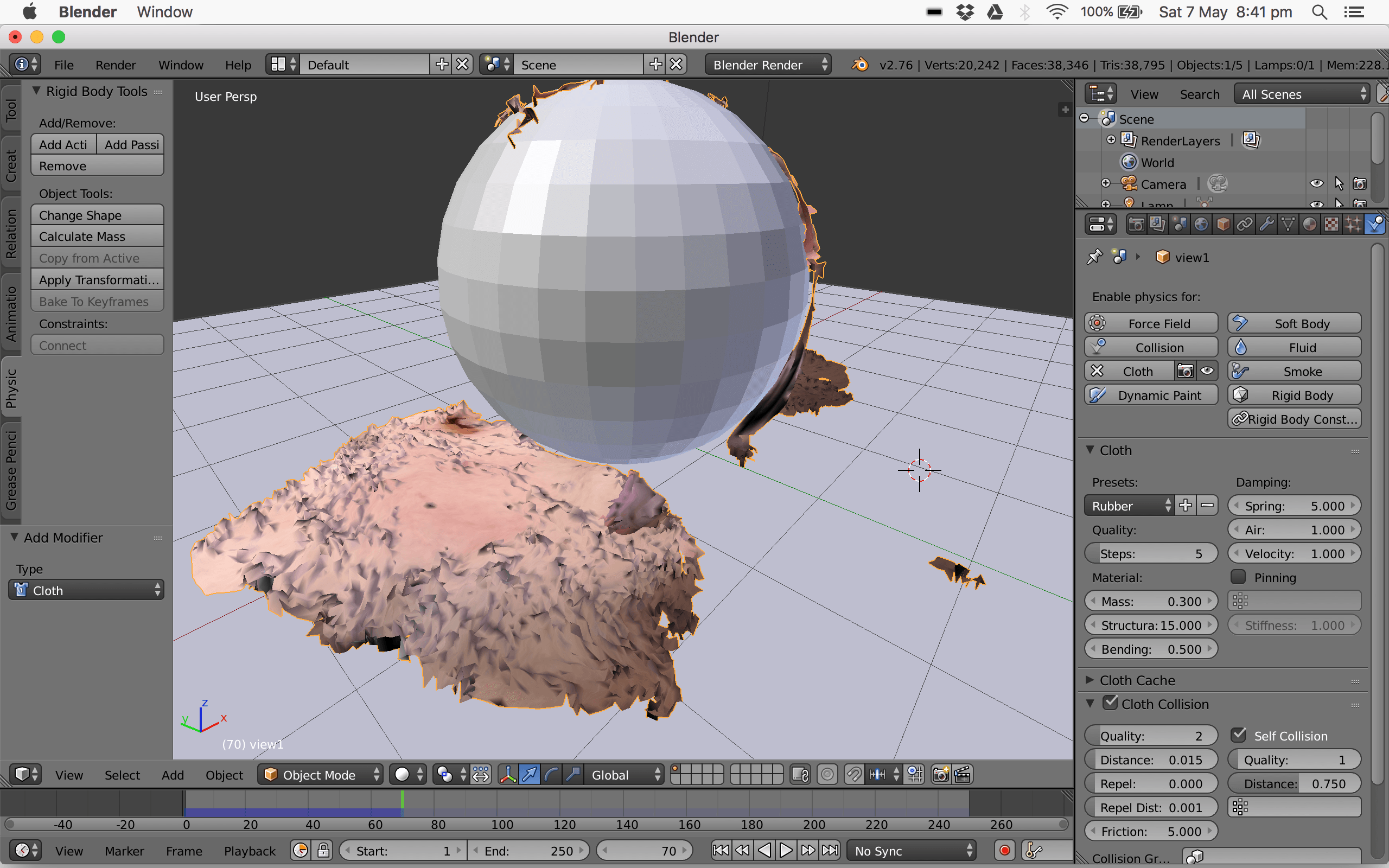

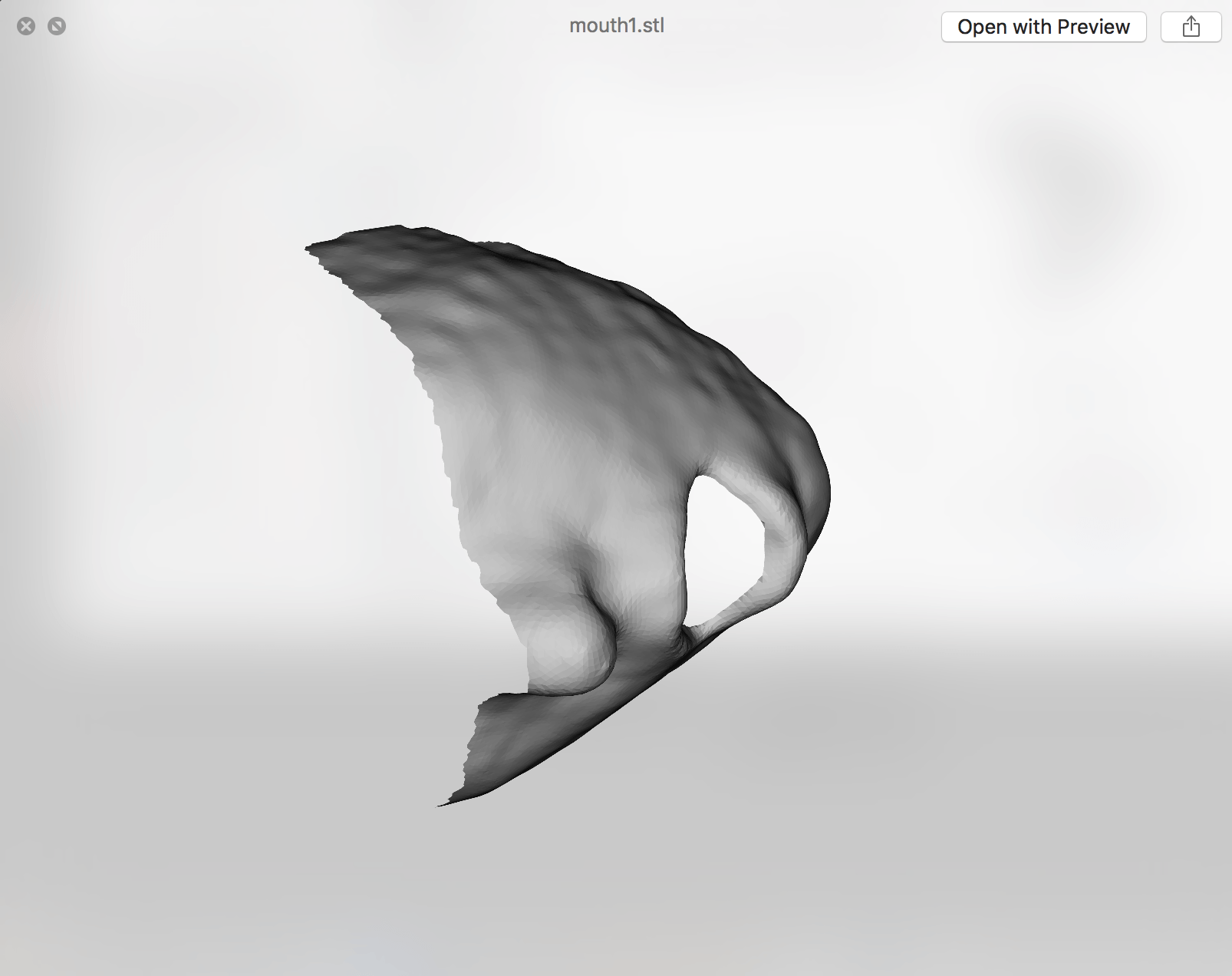

Then I could begin to animate the object in different ways. Initially I have kept this very basic, using the transformations rotation, scale and opacity.

For the rotation, the level is multiplied by 90 so that the object turns the full 360 degrees. If the level is multiplied by 20, the face only rotates about 90 degrees, and the movement isn’t large enough to be noticeable from the audio input.

The scale was tested on x alone (multiplied by 20) and on x and y together (each multiplied by 20). It works better when the object is scaled along only the x- or the y-axis, as scaling both together makes the animation awkward and throws out the placement of the initial object.

The opacity was slightly trickier to implement. As we are dealing with a 3D object, the opacity is defined by the material/texture. For this reason we cannot simply say model.opacity; we must first change the material variable to allow transparency by setting transparent to true. Then we can say material.opacity = level, which in this case was again multiplied by 20 so that the change is noticeable and the object fades effectively back to transparent. The code for each was set up as follows:

mouth.rotation.y = level * 90; // for face all the way round

mouth.rotation.y = level * 20; // for face half way round

mouth.scale.x = level * 20;

var material = new THREE.MeshLambertMaterial( { map: texture, transparent: true} );

material.opacity = level * 20;
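To make the three mappings easier to compare, here is a minimal sketch that collects them in one function. The names levelToTransforms and toDegrees are hypothetical, and it assumes the level stays between 0 and 0.1. One detail worth knowing is that three.js rotation values are given in radians, so the angle actually reached depends on how high the level gets. The opacity is clamped here because only values from 0 to 1 are meaningful.

```javascript
// Hypothetical helper: converts the microphone level into the three animated values.
// Assumes level is between 0 and 0.1.
function levelToTransforms(level) {
  return {
    rotationY: level * 90,                         // radians, as used by three.js
    scaleX: level * 20,
    opacity: Math.min(1, Math.max(0, level * 20))  // keep opacity within 0..1
  };
}

// Converts radians to degrees, to check how far a rotation value really turns the object.
function toDegrees(radians) {
  return radians * 180 / Math.PI;
}

var t = levelToTransforms(0.05);
console.log(t.rotationY, toDegrees(t.rotationY), t.scaleX, t.opacity);
```

Inside the render function the result would be applied as mouth.rotation.y = t.rotationY, mouth.scale.x = t.scaleX and material.opacity = t.opacity.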

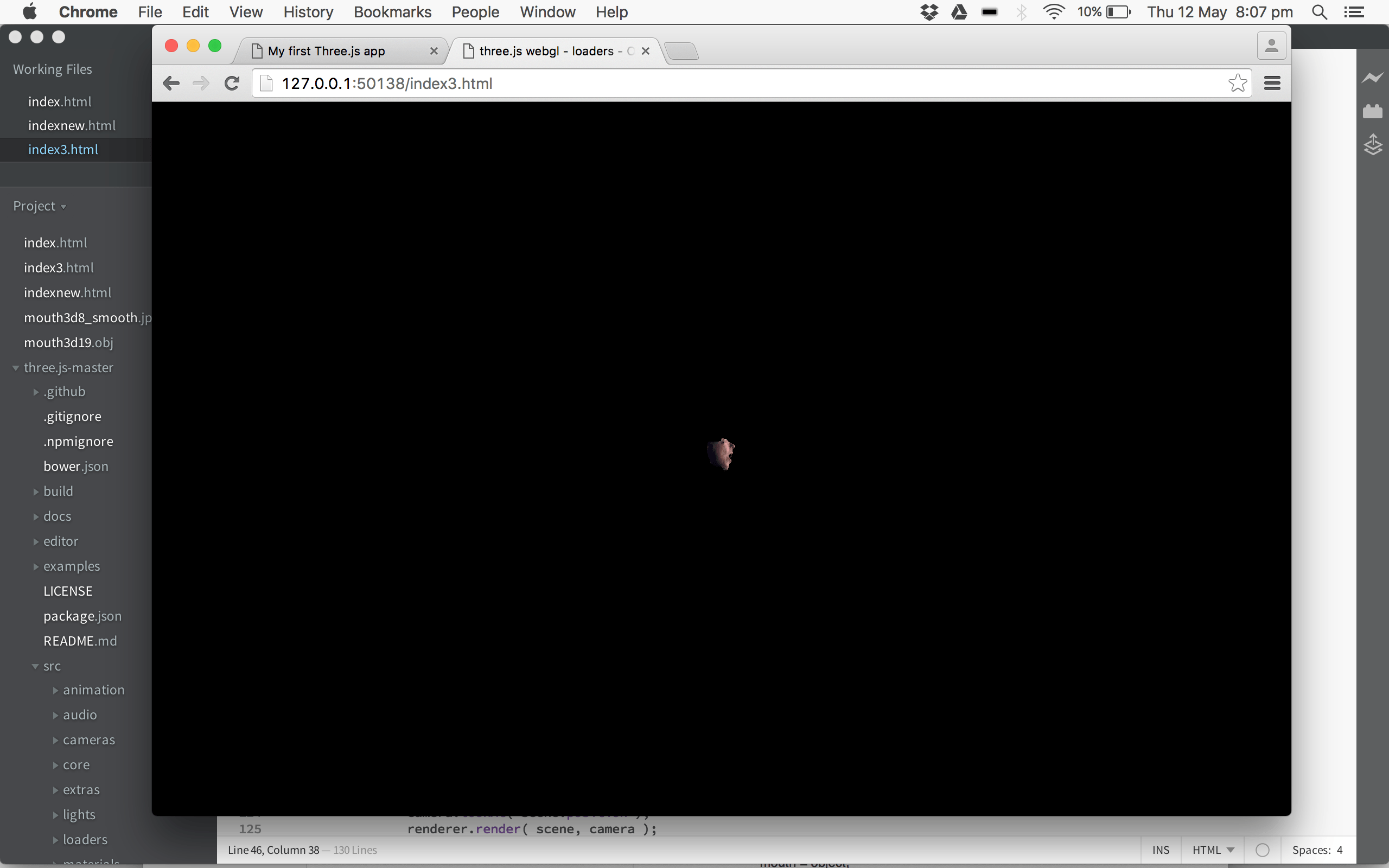

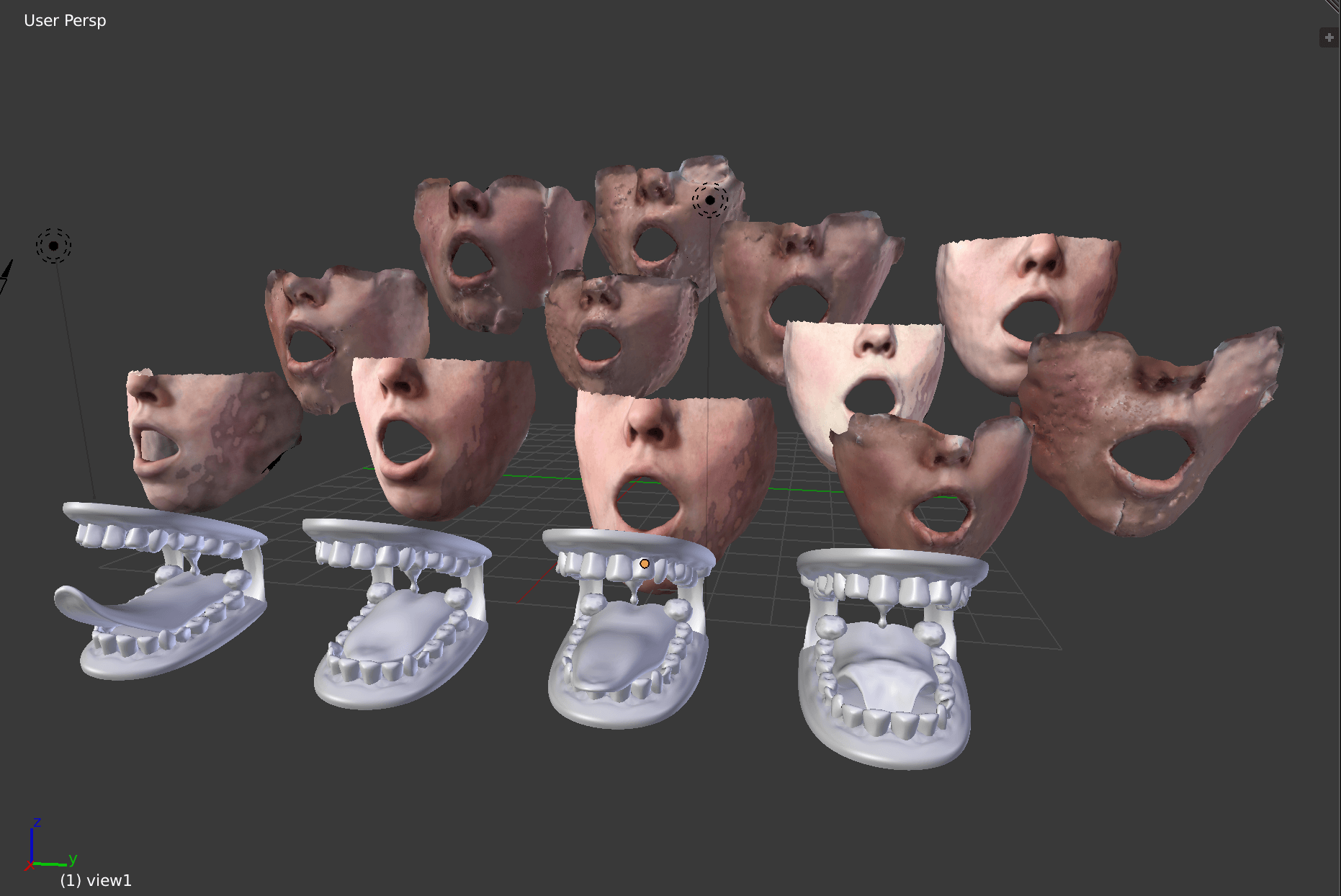

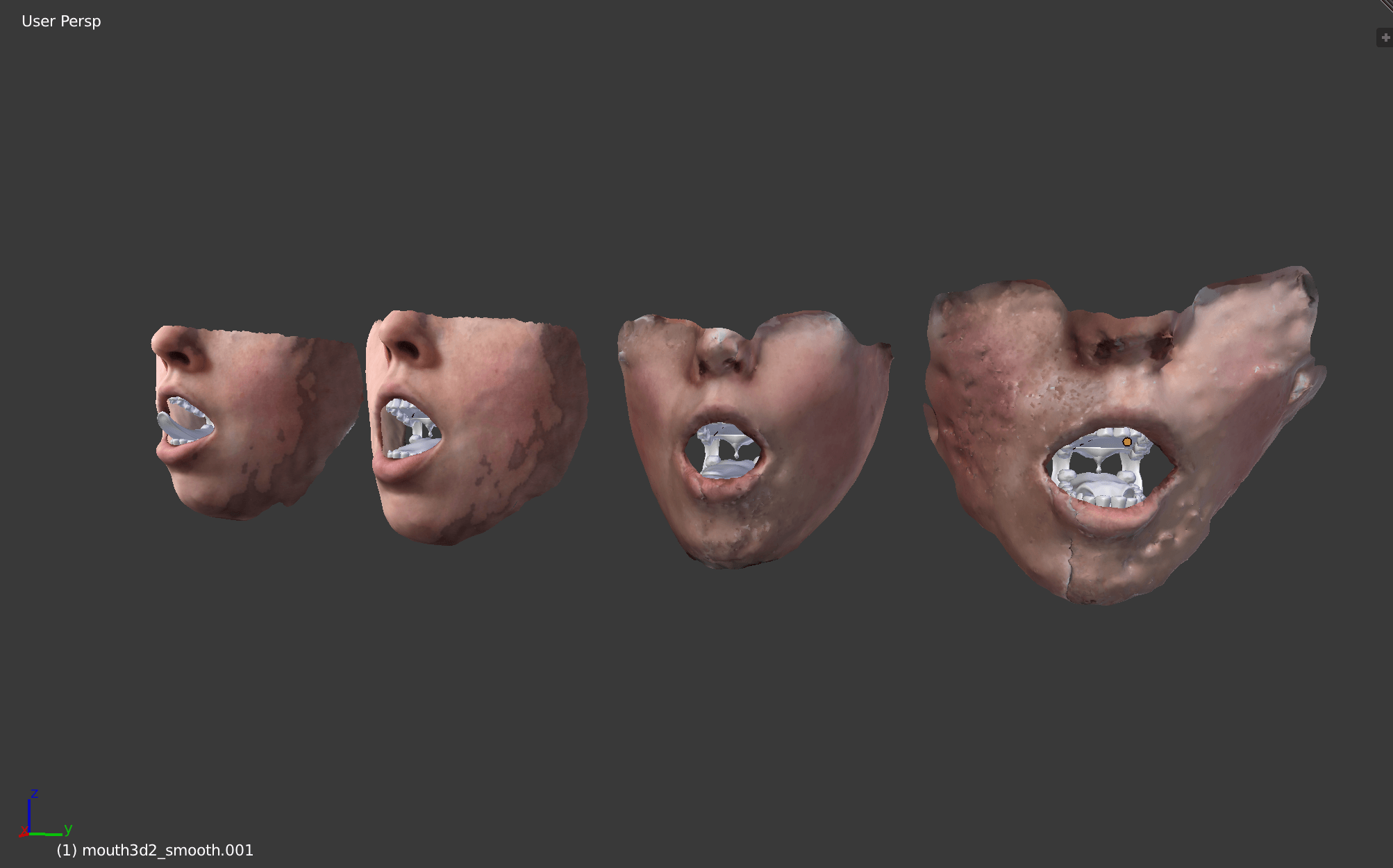

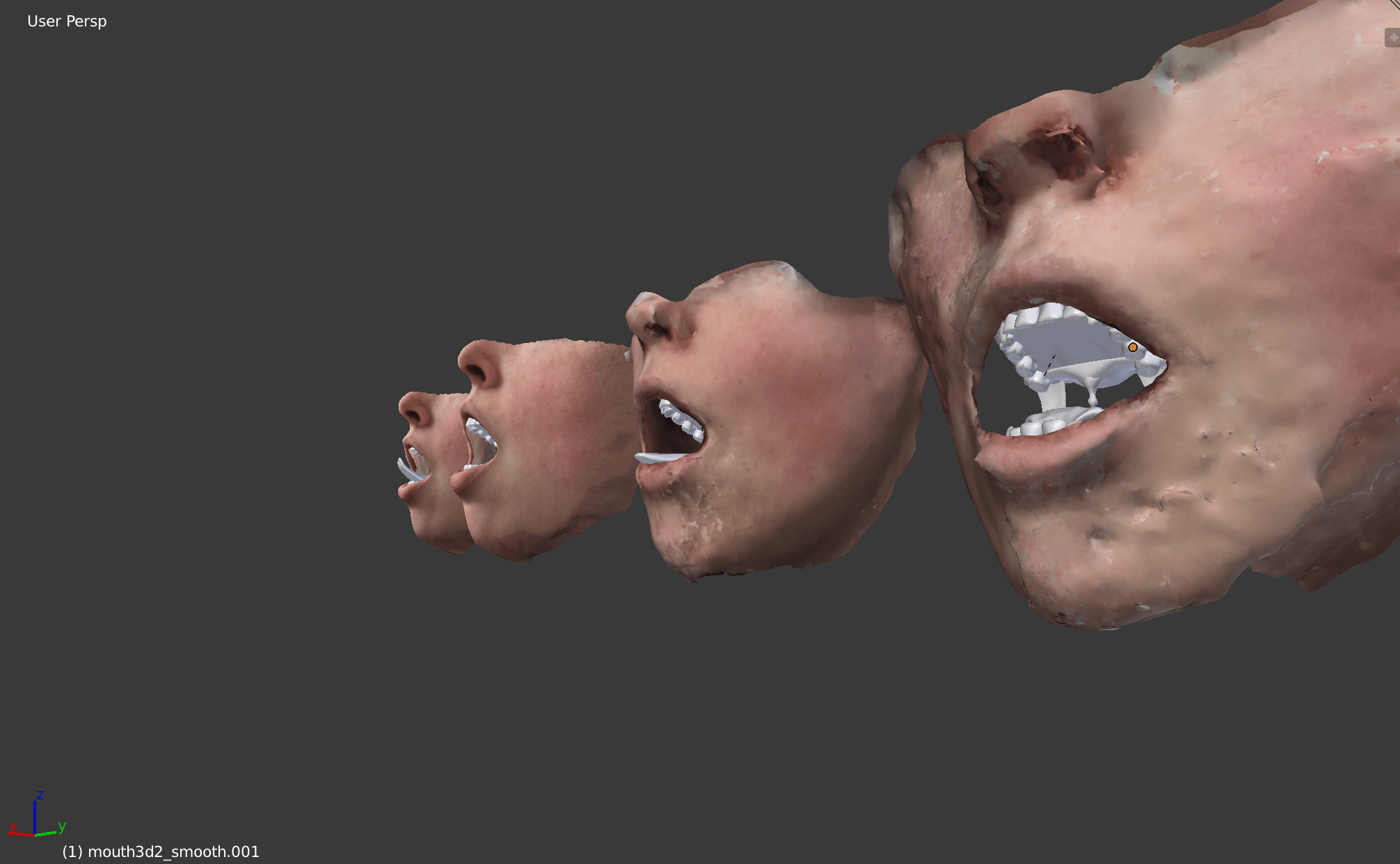

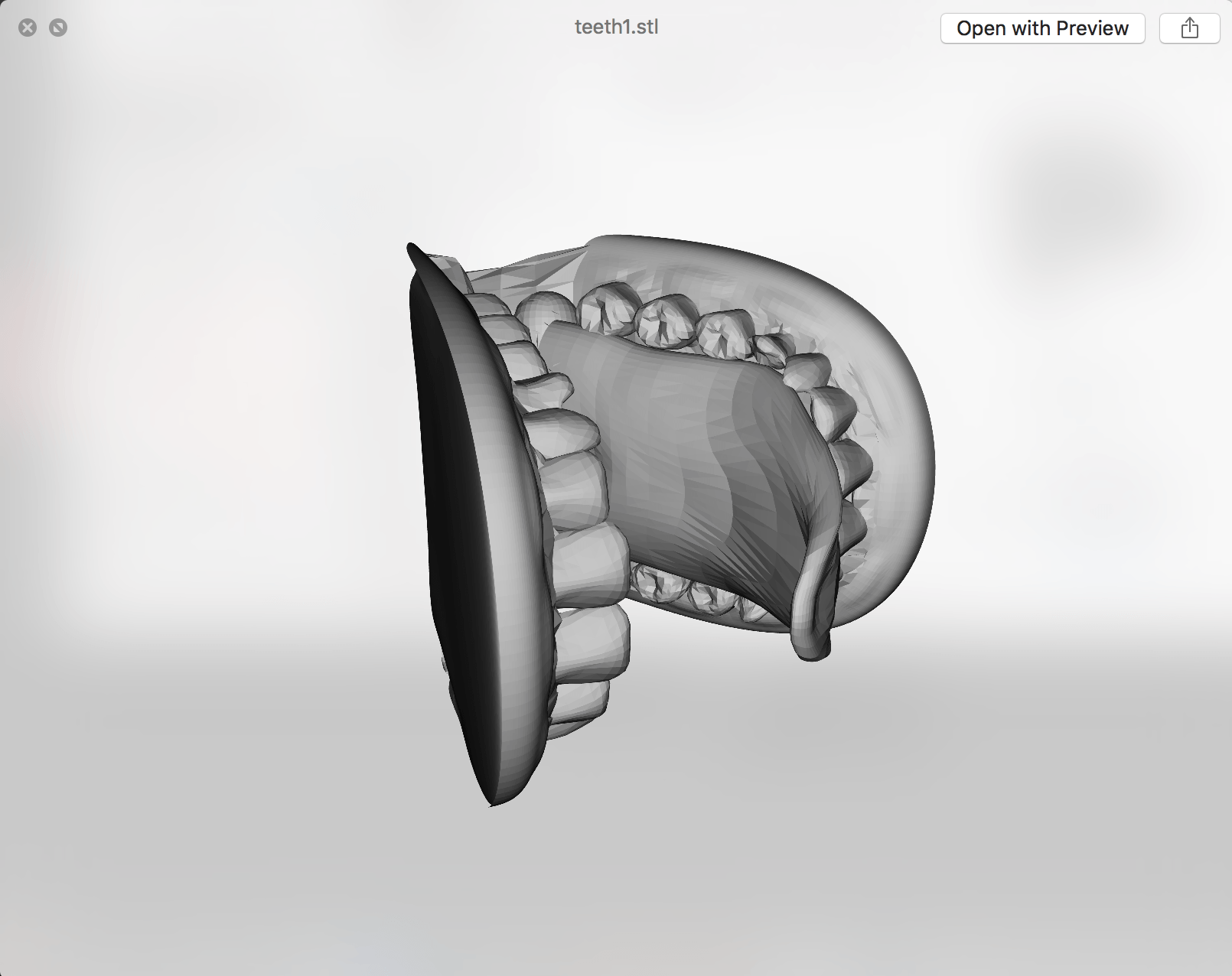

Following on from this, I combined the three experiments into one scene, where each object animates differently based on the level coming from the audio input. This again was initially difficult. I first needed to work out how to have multiple objects and define them separately, which meant naming each one uniquely; I did this in a one, two, three format. I then needed to work out how to give the opacity animation its own material variable, because if transparency is applied to the single shared material it affects every object in the scene. This again meant creating a new set of variables and naming them uniquely. The code became:

Material:

var material = new THREE.MeshBasicMaterial( { color: 0x00ff00 } );

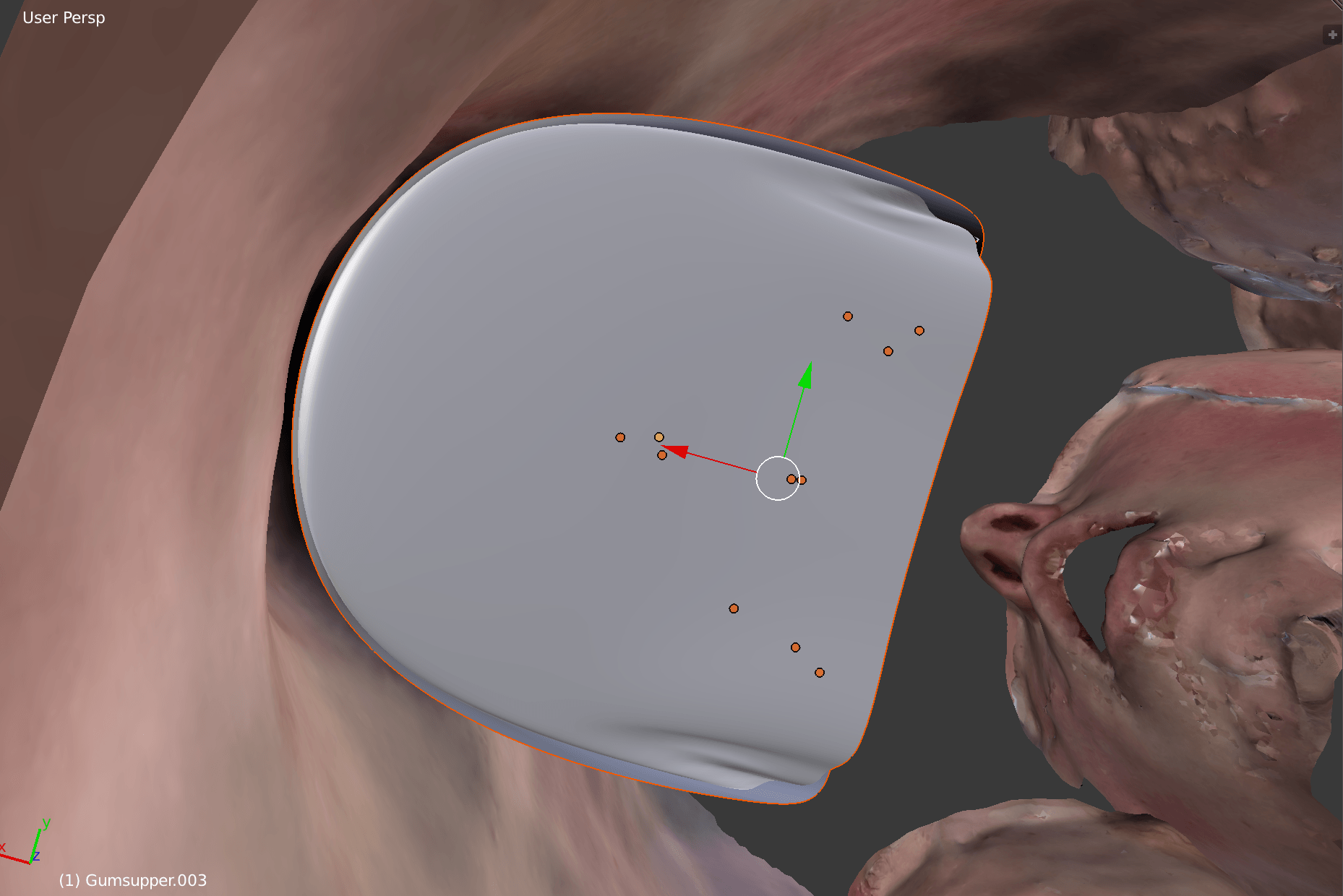

var texture = THREE.ImageUtils.loadTexture( "mouth3d8_smooth.jpg" );

var material = new THREE.MeshLambertMaterial( { map: texture } );

var materialthree = new THREE.MeshBasicMaterial( { color: 0x00ff00 } );

var texturethree = THREE.ImageUtils.loadTexture( "mouth3d8_smooth.jpg" );

var materialthree = new THREE.MeshLambertMaterial( { map: texturethree, transparent: true } );
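An alternative to writing the material out a second time, shown here only as a sketch, is to copy the existing one with the clone() method that three.js materials provide. The helper name makeFadingMaterial is hypothetical. The copy is independent, so changing its opacity leaves the other objects untouched.

```javascript
// Hypothetical helper: returns an independent, transparent copy of a material,
// so that fading it does not affect objects that still use the original.
// Assumes the argument is a three.js material, which provides clone().
function makeFadingMaterial(baseMaterial) {
  var copy = baseMaterial.clone();
  copy.transparent = true;
  return copy;
}

// Usage in the scene (not run here, as it needs three.js):
// var materialthree = makeFadingMaterial(material);
```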

Object:

var mouthone;

var mouthtwo;

var mouththree;

// instantiate a loader 1

var loaderone = new THREE.OBJLoader();

// load a resource

loaderone.load(

// resource URL

'mouth3d19.obj',

// Function when resource is loaded

function ( objectone ) {

objectone.traverse( function ( child ) {

if ( child instanceof THREE.Mesh ) {

mouthone = objectone;

child.material = material;

//child.material.map = texture;

objectone.position.y = -1;

objectone.position.x = 6;

scene.add( objectone );

}

} );

}

);

// instantiate a loader 2

var loadertwo = new THREE.OBJLoader();

// load a resource

loadertwo.load(

// resource URL

'mouth3d19.obj',

// Function when resource is loaded

function ( objecttwo ) {

objecttwo.traverse( function ( child ) {

if ( child instanceof THREE.Mesh ) {

mouthtwo = objecttwo;

child.material = material;

//child.material.map = texture;

objecttwo.position.y = -1;

objecttwo.position.x = 6;

scene.add( objecttwo );

}

} );

}

);

// instantiate a loader 3

var loaderthree = new THREE.OBJLoader();

// load a resource

loaderthree.load(

// resource URL

'mouth3d19.obj',

// Function when resource is loaded

function ( objectthree ) {

objectthree.traverse( function ( child ) {

if ( child instanceof THREE.Mesh ) {

mouththree = objectthree;

child.material = materialthree;

//child.material.map = texture;

objectthree.position.y = -1;

objectthree.position.x = 6;

scene.add( objectthree );

}

} );

}

);
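The three loader blocks above are identical apart from the names, so as a sketch they could share one function. Everything here is an assumption rather than the code I actually used: loadMouth and its options are hypothetical names, the loader is assumed to behave like THREE.OBJLoader (load(url, onLoad)), and the isMesh option stands in for the child instanceof THREE.Mesh check so the function can be tried without three.js. It also adds the object to the scene once after the traverse rather than inside it.

```javascript
// Hypothetical helper: loads one copy of the model, gives every mesh the chosen
// material, positions the object and adds it to the scene.
function loadMouth(options, onLoaded) {
  options.loader.load(options.url, function (object) {
    object.traverse(function (child) {
      if (options.isMesh(child)) {
        child.material = options.material;
      }
    });
    object.position.x = options.x;
    object.position.y = -1;
    options.scene.add(object);
    onLoaded(object); // hand the loaded object back so it can be stored and animated
  });
}

// Usage in the scene (not run here, as it needs three.js and the model file):
// loadMouth({
//   loader: new THREE.OBJLoader(), scene: scene, url: 'mouth3d19.obj',
//   material: materialthree, x: 6,
//   isMesh: function (child) { return child instanceof THREE.Mesh; }
// }, function (object) { mouththree = object; });
```

Giving each call its own x value would also be a simple way to place the three objects side by side.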

camera.position.z = 10;

var render = function () {

requestAnimationFrame( render );

if ( mouthone ){

if(level > 0){

mouthone.scale.x = level * 20;

}

}

if ( mouthtwo ){

if(level > 0){

mouthtwo.rotation.y = level * 90;

}

}

if ( mouththree ){

if(level > 0){

materialthree.opacity = level * 20;

}

}

renderer.render(scene, camera);

};

render();

</script>

</body>

</html>